In this project, accessibility meant the ability of hearing impaired learners to access learning events presented primarily in audio. It is ironic that the move to audio, a giant leap forward for the vision impaired, was a step backwards for the hearing impaired. Before audio materials became popular on the web, the largely text-based web had been a great boon for the deaf community.

George Buys, a blind software developer who created the Talking Communities virtual classroom (also known as iVocalize or Alado), says that making audio input accessible to the hearing impaired is one of the more challenging issues facing web developers.

At present there are two possible courses of action, neither of them entirely satisfactory.

1) Voice Recognition Software

Examples: Dragon NaturallySpeaking, ViaVoice

This kind of technology is reasonably well known and quite common. Voice recognition software can be trained to recognise an individual user's voice and transcribe that voice's spoken utterances into written (typed) text. Users report that after only a few hours of training this remarkable software reaches about 90% accuracy.

This may be useful when recording an audio file for a voice email or voice board (asynchronous media), but it won't work in a live, synchronous setting. Voice recognition software is trained to transcribe one voice, and one voice only, so the mix of voices in a typical synchronous interaction would render its output unintelligible. Even if each participant ran their own specially trained software to transcribe their own voice, it is not practical to set up a dialogue, in an online chat for example, where you can hear the other participants' voices but the software is shielded from them.

Another disadvantage of voice recognition software is that its output is written text only. The spoken original is not retained, whereas what is ideally needed is software that produces spoken and written output in tandem.
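To put the reported 90% accuracy figure in perspective, the small calculation below estimates how many words a transcript would get wrong. It is only a sketch: it treats accuracy as the share of words transcribed correctly, and the 10-minute contribution and speaking rate of about 150 words per minute are hypothetical figures chosen for illustration, not measurements from this project.

```python
# Rough sketch: how many words a transcript is likely to get wrong at a
# given word-level accuracy. The 90% default is the user-reported rate
# quoted above; the speaking time and rate below are hypothetical.

def expected_errors(word_count: int, accuracy_pct: int = 90) -> int:
    """Estimate misrecognised words (rounded down) for a transcript."""
    if not 0 <= accuracy_pct <= 100:
        raise ValueError("accuracy_pct must be between 0 and 100")
    # Integer arithmetic avoids floating-point noise; // rounds down.
    return word_count * (100 - accuracy_pct) // 100

# Hypothetical 10-minute spoken contribution at about 150 words per minute.
words = 10 * 150
print(expected_errors(words))  # 150
```

Even at 90% accuracy, roughly one word in ten needs correcting, which matters for a hearing impaired reader who cannot check the transcript against the audio.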

2) Closed Captioning

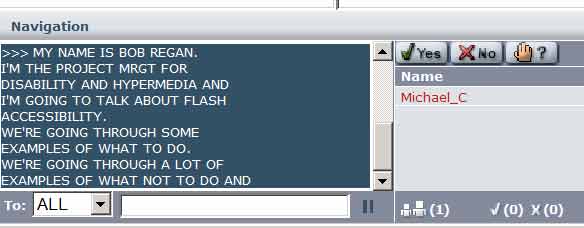

When I first saw this approach in action I was very impressed. As the speaker at the conference worked through the presentation, the full text of the speech appeared on a separate screen in real time with stunning speed and accuracy. I assumed it was being magically transcribed by some superb state-of-the-art machine somewhere. It was, but that machine was human: a highly paid speed typist was typing every word virtually as soon as it was uttered.

There are closed captioning services (Caption IT, Caption Colorado, Caption First) that will attend a live voice chat or conference presentation to provide this kind of synchronous typing, but they don't come cheap. The cheapest rate I have been able to find is A$200 per hour, and no such services exist in Australia at present. This A$200/hr rate is the same whether the captioning service is present in real time or, as is more typically the case, the spoken dialogue is transcribed after the fact.
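A quick way to see what this rate means for a whole course is to multiply it out. The sketch below assumes the flat A$200/hr rate quoted above applies equally to live and after-the-fact captioning; the 12-week course with one 1.5-hour session per week is a hypothetical example, not a real quote from any provider.

```python
# Rough cost sketch for human closed captioning, assuming the flat
# A$200/hr rate quoted above for both live and post-session work.
# Session counts and lengths are hypothetical illustrations.

RATE_AUD_PER_HOUR = 200  # cheapest rate quoted in the text

def captioning_cost(sessions: int, hours_per_session: float,
                    rate: float = RATE_AUD_PER_HOUR) -> float:
    """Return the total captioning cost in A$ for a run of sessions."""
    if sessions < 0 or hours_per_session < 0:
        raise ValueError("sessions and hours must be non-negative")
    return sessions * hours_per_session * rate

# Hypothetical 12-week course, one 1.5-hour live session per week.
print(captioning_cost(12, 1.5))  # 3600.0
```

Because the rate is flat, captioning archives after the fact saves nothing per hour; the only saving comes from captioning fewer hours.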

Some companies, notably Horizon Wimba with their Live Classroom, have arrangements with specific closed captioning services at reduced rates. Tools like Live Classroom archive all the content from live sessions, and if an archive has been targeted for closed captioning (this does not happen automatically), the third-party closed captioning service provides a written transcript of the spoken dialogue for inclusion in the archive. When viewing the archive, the hearing impaired user simply types /cc to call up the closed captioning and can read every spoken word.

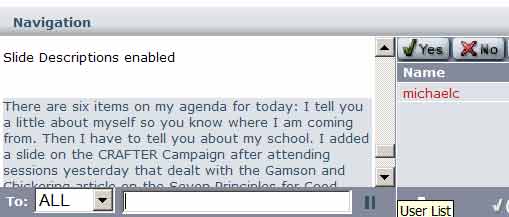

Live Classroom provides another service to aid hearing impaired participants in live sessions and archives. Presenters who use PowerPoint to design content for their sessions can use the notes tool (the facility where the presenter writes a note of explanation for each slide) so that written text appears in the text chat area as they speak. This requires the notes for each slide to become, in effect, a script that closely corresponds to what the presenter actually says. Whether attending live or viewing the archive later, the listener simply types /sd to view the presenter's spoken comments as written text.

Elluminate

From the Elluminate website:

Accessibility at a Glance

Elluminate Live! provides the ultimate in flexibility, enabling each individual user to experience the live eLearning and Web collaboration environment in the best way possible. Accessibility features include:

* Multiple streams of closed captioning

From the White Paper:

Support for Closed Captioning: Elluminate has written a special utility which allows all audio content to be closed captioned for hearing impaired participants. The Elluminate Closed Captioning utility allows for the captioning to be provided by multiple providers which allows for Priority 3 features such as captioning the same session in multiple languages allowing participants to subscribe to their preferred captioning provider.

So who organises the closed captioning provider: the user or the organisation hosting the event? And who pays?

* Enlarged, easier-to-see video

* Activation of menus and dialogs via short-cut keys

* Ability to inherit user-defined color schemes from operating system

* Scalability of visual content, such as direct messaging

* Auditory notification of certain events, such as new user joining session

* Magnifier for application sharing

* Customized content selection

* User controlled interface layout

* Hot key to terminate application sharing

* Java Accessibility Bridge for screen readers, like JAWS and Narrator

More from the White Paper:

Support for Screen Readers: Elluminate allows an easy install version of the Java Accessibility Bridge to be downloaded from our Support site (http://www.elluminate.com/support). This software allows screen readers such as JAWS to read the interface and provide auditory cues about the software functionality to visually impaired participants. All menus and dialog boxes are readable by the screen reader.

Since only menus and dialog boxes are mentioned, am I to infer that the screen reader cannot read the text chat?

* Capture and replay of entire sessions

Other Kinds of Accessibility

Voice as a Solo Pursuit

There is another aspect of accessibility to consider when using web-based voice tools. In my observations of students using voice tools throughout this project, it has been apparent that most of them do not have access to a private space in which to contribute to voice-based events. In institutions, where many students do their computer work, most computers are in public spaces. Contributing in writing is a silent activity and can be done without fuss in a public space. This is not the case with voice tools. Contributing with your voice means you can be heard by others sharing that space and may disrupt them. It also hampers free communication, as the people randomly gathered around are not your intended audience, and it can be embarrassing to sit at a computer, albeit with a headset, seemingly talking to oneself. This has serious consequences for the take-up and effective use of voice tools in educational environments. Students may need access to computers in relatively private or sound-proofed areas to feel comfortable contributing to voice-based online events.

Students may also need access to headsets, microphones and the expertise to use them.